- Title

3D Domain Adaptive Instance Segmentation Via Cyclic Segmentation GANs

- Authors

- Lauenburg, L., Lin, Z., Zhang, R., Santos, M.D., Huang, S., Arganda-Carreras, I., Boyden, E.S., Pfister, H., Wei, D.

- Source

- IEEE J Biomed Health Inform

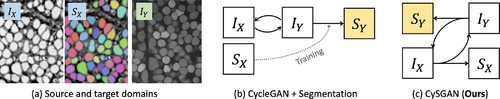

Overview of the task and methods. …

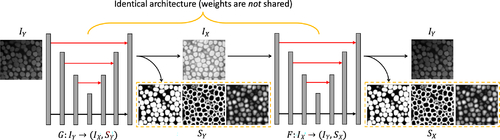

Architecture details of CySGAN. Given an image sampled from …

Different segmentation losses for the two domains. …

Restore augmented regions with an adapted cycle-consistency strategy. We show four consecutive slices of …

Visualization of the NucExM dataset. We sample a sub-volume of size …

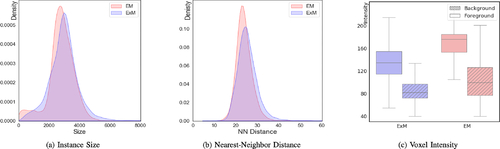

Statistics of the source (EM) and target (ExM) datasets. We show the distribution of …

Visual comparisons of segmentation results. …